Agentic Modernization: The 2026 Playbook for Mainframe-to-Cloud

India is the custodian of the world's technical debt. For decades, GCCs (Global Capability Centers) in Bengaluru, Pune, and Hyderabad have been tasked with maintaining the unmaintainable: millions of lines of COBOL, JCL, and Fortran that run the global banking and insurance sectors.

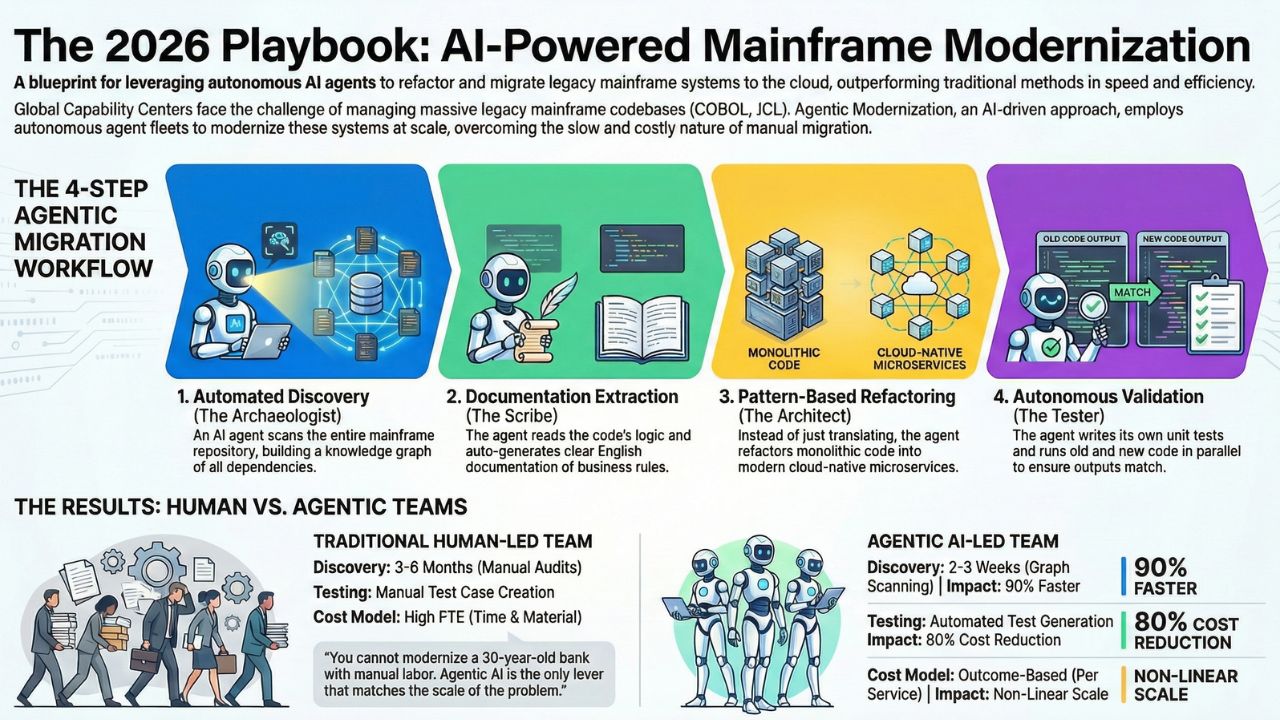

The traditional "Lift and Shift" model has failed. It is too slow, too risky, and too expensive. The solution for GCC 4.0 is Agentic Modernization.

We are no longer using humans to read code and rewrite it. We are using autonomous AI agent fleets to ingest legacy repositories, map dependencies, and refactor code into cloud-native microservices. This is the only way to clear the backlog before 2030.

The Workflow: How Agents Modernize Legacy Systems

Agentic modernization is not a "Copilot" suggesting a line of code. It is a multi-agent workflow that acts as an autonomous migration factory. Here is the 4-step playbook for 2026:


Step 1: Automated Discovery (The Archaeologist)

Before you change code, you must understand it. An "Ingestion Agent" scans the mainframe repository and builds a knowledge graph of every variable, dependency, and database call. It flags "dead code" automatically, a job that takes human teams months.
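To make the "knowledge graph" idea concrete, here is a minimal sketch, assuming a simplified free-format COBOL where paragraph labels start in column 1 and statements are indented. It records which paragraphs `PERFORM` which others, notes external `CALL`s and embedded SQL, then walks the graph from the entry paragraph to list paragraphs nothing reaches. The sample program and all function names are hypothetical. A real analyzer must also handle fall-through between paragraphs, `GO TO`, `PERFORM ... THRU`, and inline `PERFORM UNTIL`, so treat the output as dead-code *candidates* for human review.

```python
import re
from collections import deque
from typing import Dict, List, Set, Tuple

# Hypothetical sample program in simplified free format.
SAMPLE = """\
MAIN-PARA.
    PERFORM LOAD-DATA.
    PERFORM CALC-RATE.
    STOP RUN.
LOAD-DATA.
    EXEC SQL SELECT BAL INTO :WS-BAL FROM ACCOUNTS END-EXEC.
CALC-RATE.
    CALL 'RATELIB' USING WS-BAL.
OLD-PROMO.
    PERFORM CALC-RATE.
"""

PARA_RE = re.compile(r"^([A-Z0-9-]+)\.\s*$")          # unindented paragraph label
PERFORM_RE = re.compile(r"\bPERFORM\s+([A-Z0-9-]+)")  # internal control-flow edge
CALL_RE = re.compile(r"\bCALL\s+'([A-Z0-9-]+)'")      # external program dependency


def build_graph(source: str) -> Tuple[Dict[str, Set[str]], Dict[str, Set[str]]]:
    """Return (paragraph -> performed paragraphs, paragraph -> external deps)."""
    graph: Dict[str, Set[str]] = {}
    externals: Dict[str, Set[str]] = {}
    current = None
    for line in source.splitlines():
        label = PARA_RE.match(line)
        if label:
            current = label.group(1)
            graph[current] = set()
            externals[current] = set()
            continue
        if current is None:
            continue  # ignore anything before the first paragraph
        graph[current].update(PERFORM_RE.findall(line))
        externals[current].update(CALL_RE.findall(line))
        if "EXEC SQL" in line:
            externals[current].add("SQL")
    return graph, externals


def find_dead_paragraphs(graph: Dict[str, Set[str]], entry: str) -> List[str]:
    """Breadth-first search from the entry point; unvisited paragraphs are candidates."""
    reachable = {entry}
    queue = deque([entry])
    while queue:
        para = queue.popleft()
        for callee in graph.get(para, ()):
            if callee not in reachable:
                reachable.add(callee)
                queue.append(callee)
    return sorted(set(graph) - reachable)


graph, externals = build_graph(SAMPLE)
print(find_dead_paragraphs(graph, "MAIN-PARA"))  # ['OLD-PROMO']
```

The same adjacency map doubles as the dependency inventory: `externals` shows that `CALC-RATE` depends on the external program `RATELIB` and `LOAD-DATA` touches the database.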

Step 2: Documentation Extraction (The Scribe)

The biggest risk in modernization is "Tribal Knowledge": the developer who wrote the code retired in 2015. Agents read the logic and generate plain-English documentation that explains each business rule (e.g., "If credit score < 600, reject loan").
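Production agents typically use a language model for this step. The deterministic sketch below only illustrates the shape of the output: it matches a narrow, hypothetical `IF <field> <op> <number> MOVE '<literal>' TO <field>` pattern and renders each match as an English sentence. The regex, the sample snippet, and the `DEBT-RATIO` rule are assumptions for illustration; real COBOL also writes conditions as `LESS THAN`, nests `IF`s, and uses `EVALUATE`, none of which this handles.

```python
import re
from typing import List

# Narrow, hypothetical pattern: a numeric comparison followed by a MOVE of a literal.
RULE_RE = re.compile(
    r"\bIF\s+([A-Z0-9-]+)\s*(<=|>=|<|>|=)\s*([0-9]+)\s+"
    r"MOVE\s+'([^']*)'\s+TO\s+([A-Z0-9-]+)"
)


def humanize(name: str) -> str:
    """CREDIT-SCORE -> credit score"""
    return name.lower().replace("-", " ")


def extract_rules(source: str) -> List[str]:
    """Turn each matched IF/MOVE pair into a one-line English business rule."""
    rules = []
    for field, op, value, literal, target in RULE_RE.findall(source):
        rules.append(f"If {humanize(field)} {op} {value}, set {humanize(target)} to '{literal}'.")
    return rules


COBOL_SNIPPET = """
    IF CREDIT-SCORE < 600
        MOVE 'REJECT' TO LOAN-STATUS
    END-IF
    IF DEBT-RATIO > 45
        MOVE 'REVIEW' TO LOAN-STATUS
    END-IF
"""

for rule in extract_rules(COBOL_SNIPPET):
    print(rule)
# If credit score < 600, set loan status to 'REJECT'.
# If debt ratio > 45, set loan status to 'REVIEW'.
```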

Step 3: Pattern-Based Refactoring (The Architect)

The agent doesn't just translate syntax; it refactors structure. It converts monolithic COBOL procedures into isolated Java/Python classes or microservices, stripping away the technical debt of the last 20 years.
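What "refactors structure" can mean in practice is sketched below, assuming the loan rule from Step 2 plus a hypothetical debt-ratio threshold. The COBOL paragraph (shown as a comment) reads and writes program-wide `WORKING-STORAGE` fields; the Python target is a stateless class whose inputs, outputs, and thresholds are explicit. Class and field names are illustrative, not the output of any particular tool.

```python
from dataclasses import dataclass
from enum import Enum

# The kind of COBOL paragraph being replaced (reference only):
#   DECIDE-LOAN.
#       EVALUATE TRUE
#           WHEN CREDIT-SCORE < 600
#               MOVE 'REJECT' TO LOAN-STATUS
#           WHEN DEBT-RATIO > 45
#               MOVE 'REVIEW' TO LOAN-STATUS
#           WHEN OTHER
#               MOVE 'APPROVE' TO LOAN-STATUS
#       END-EVALUATE.
# All three fields live in WORKING-STORAGE and are shared by the whole program.


class LoanStatus(str, Enum):
    APPROVE = "APPROVE"
    REVIEW = "REVIEW"
    REJECT = "REJECT"


@dataclass(frozen=True)
class LoanApplication:
    credit_score: int
    debt_ratio_pct: int


@dataclass(frozen=True)
class LoanPolicy:
    # Thresholds become explicit configuration instead of literals buried in code.
    min_credit_score: int = 600
    max_debt_ratio_pct: int = 45  # hypothetical second rule


class LoanDecisionService:
    """Stateless: an application goes in, a decision comes out, no shared globals."""

    def __init__(self, policy: LoanPolicy = LoanPolicy()):
        self.policy = policy

    def decide(self, app: LoanApplication) -> LoanStatus:
        # Same ordering as the EVALUATE: the first matching WHEN wins.
        if app.credit_score < self.policy.min_credit_score:
            return LoanStatus.REJECT
        if app.debt_ratio_pct > self.policy.max_debt_ratio_pct:
            return LoanStatus.REVIEW
        return LoanStatus.APPROVE


service = LoanDecisionService()
print(service.decide(LoanApplication(credit_score=550, debt_ratio_pct=20)).value)  # REJECT
```

Because the class has no hidden state, it can be wrapped in an HTTP endpoint or unit-tested in isolation, which is what makes the microservice split possible.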

Step 4: Autonomous Validation (The Tester)

This is the game changer. The agent writes its own unit tests. It runs the old code and the new code side-by-side (parallel run) to ensure the outputs match exactly.
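A parallel run reduces to a simple contract: feed identical inputs to both implementations and report every case where outputs differ. The harness below is a minimal sketch of that contract. `legacy_decision` stands in for output captured from the mainframe run (an assumption, since Python cannot execute the COBOL directly), and `migrated_decision` contains a deliberate off-by-one (`<=` instead of `<`) to show the kind of drift that boundary-value cases catch.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


def legacy_decision(credit_score: int, debt_ratio_pct: int) -> str:
    # Stand-in for results captured from the legacy batch job (hypothetical).
    if credit_score < 600:
        return "REJECT"
    if debt_ratio_pct > 45:
        return "REVIEW"
    return "APPROVE"


def migrated_decision(credit_score: int, debt_ratio_pct: int) -> str:
    # Candidate rewrite with a subtle boundary bug: <= instead of <.
    if credit_score <= 600:
        return "REJECT"
    if debt_ratio_pct > 45:
        return "REVIEW"
    return "APPROVE"


@dataclass
class ParityReport:
    total: int
    mismatches: List[Tuple[tuple, str, str]]  # (inputs, legacy output, new output)

    @property
    def passed(self) -> bool:
        return not self.mismatches


def parallel_run(old: Callable, new: Callable, cases: Iterable[tuple]) -> ParityReport:
    """Run both implementations on every case and collect exact-match failures."""
    mismatches = []
    total = 0
    for args in cases:
        total += 1
        expected, actual = old(*args), new(*args)
        if expected != actual:
            mismatches.append((args, expected, actual))
    return ParityReport(total, mismatches)


# Generated boundary values around each threshold, where rewrites most often drift.
cases = [(score, debt) for score in (599, 600, 601, 750) for debt in (44, 45, 46)]
report = parallel_run(legacy_decision, migrated_decision, cases)
print(report.passed, len(report.mismatches))  # False 3
```

All three failures sit at `credit_score == 600`, which points a reviewer straight at the comparison operator.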

Metrics: Human vs. Agentic Migration

Why switch? The math is undeniable. Agentic workflows compress the most labor-intensive parts of the migration lifecycle.

| Phase | Human-Led Team (Traditional) | Agentic Team (GCC 4.0) | Impact |
|---|---|---|---|
| Discovery | 3-6 months (manual audits) | 2-3 weeks (graph scanning) | 90% faster |
| Code Conversion | Slow, error-prone manual typing | Instant generation, review-based | 60% faster |
| Testing | Manual test-case creation | Automated test generation | 80% cost reduction |
| Cost Model | High FTE (time & material) | Outcome-based (per line/service) | Non-linear scale |
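The Discovery row can be sanity-checked with simple arithmetic. The sketch below assumes an average month of 52/12 weeks and reads "90% faster" as a 90% reduction in elapsed time; both are interpretations, not figures from a benchmark. Taking the table's ranges at face value, the reduction falls between roughly 77% and 92%, so 90% sits toward the optimistic end.

```python
def months_to_weeks(months: float) -> float:
    # Average month length: 52 weeks / 12 months.
    return months * 52 / 12


def reduction(before_weeks: float, after_weeks: float) -> float:
    """Fractional reduction in elapsed time (0.9 means 90% less time)."""
    return 1 - after_weeks / before_weeks


human = (months_to_weeks(3), months_to_weeks(6))  # 13.0 to 26.0 weeks
agent = (2.0, 3.0)                                # weeks, from the table

worst = reduction(human[0], agent[1])             # fastest humans vs slowest agents
best = reduction(human[1], agent[0])              # slowest humans vs fastest agents
mid = reduction(sum(human) / 2, sum(agent) / 2)   # midpoints of both ranges

print(f"worst {worst:.0%}, midpoint {mid:.0%}, best {best:.0%}")
# worst 77%, midpoint 87%, best 92%
```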

Strategic Value for the GCC

For the GCC Head, this is more than a technical upgrade; it is a value realization strategy. By deploying Agentic Modernization, the GCC transitions from a "Cost Center" (keeping the lights on) to an "Innovation Hub" (accelerating the cloud journey).

Next Steps for Technical Leaders

Do not attempt to boil the ocean. Start small.

1. The Pilot: Select a low-risk, non-critical module (e.g., a reporting batch job).

2. The Stack: Evaluate tools like IBM watsonx Code Assistant or AWS Mainframe Modernization (with Amazon Q).

3. The Team: Re-skill your COBOL veterans to become "Migration Architects" who review the agents' work.
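Choosing the pilot can be made repeatable with a crude risk score over the module inventory that discovery produces. The sketch below is entirely hypothetical: the fields, weights, size cap, and module names are assumptions chosen to encode the advice above (non-critical, few dependents, batch rather than online), and any real weighting should come from your own portfolio.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Module:
    name: str
    business_critical: bool
    dependents: int      # how many other programs call or read from it
    lines_of_code: int
    batch_only: bool     # batch job with no online (e.g., CICS) transactions


def risk_score(m: Module) -> float:
    # Hypothetical weighting: blast radius dominates, size breaks ties.
    score = m.dependents * 10 + m.lines_of_code / 1000
    if not m.batch_only:
        score += 25  # online transactions are harder to parallel-run
    return score


def pick_pilot(modules: List[Module], max_loc: int = 20_000) -> Optional[Module]:
    """Lowest-risk non-critical module under the size cap, or None if none qualify."""
    candidates = [m for m in modules if not m.business_critical and m.lines_of_code <= max_loc]
    return min(candidates, key=risk_score, default=None)


inventory = [
    Module("PAYPOST", business_critical=True, dependents=14, lines_of_code=85_000, batch_only=False),
    Module("RPTMONTH", business_critical=False, dependents=1, lines_of_code=6_500, batch_only=True),
    Module("CUSTINQ", business_critical=False, dependents=3, lines_of_code=12_000, batch_only=False),
    Module("ARCHIVE", business_critical=False, dependents=0, lines_of_code=30_000, batch_only=True),
]
print(pick_pilot(inventory).name)  # RPTMONTH
```

Here the monthly reporting batch job wins, matching the article's suggested starting point.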


FAQ: Agentic Modernization

Can AI agents really convert COBOL into modern languages?

Yes. Modern agentic workflows don't just "translate" line by line; they understand business logic. They can refactor monolithic COBOL procedures into microservices-ready Java or Python code with high accuracy, though human review is still required.

How much time does agentic modernization save?

Benchmarks suggest a 40-60% reduction in total project timeline. The biggest gains come in Discovery (reading the code) and Testing (writing test cases), where agents finish in weeks work that takes human teams months.

Will agents replace developers?

No. Agentic modernization shifts the developer's role from "Translator" to "Reviewer." Developers are still needed to validate the architecture, review the agents' code for security nuances, and handle complex edge cases that agents might miss.