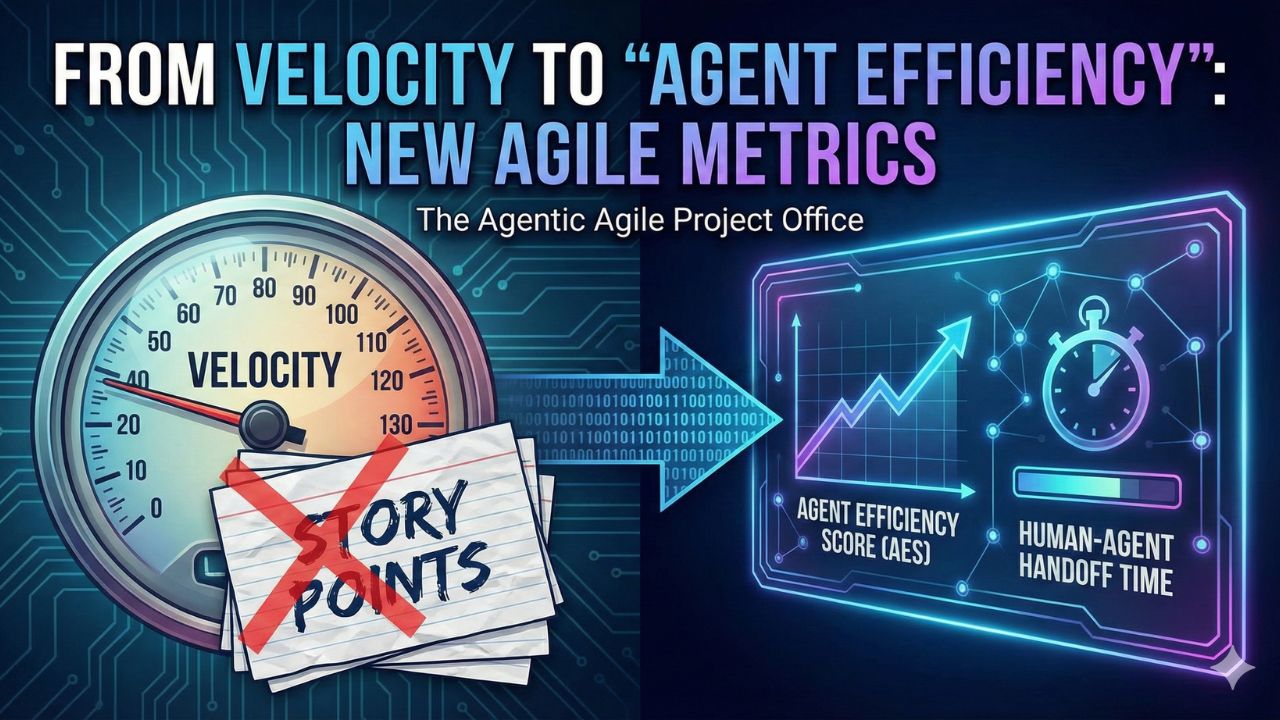

From Velocity to "Agent Efficiency": New Agile Metrics for 2026

In 2024, a "3-point" story meant roughly half a day of focused human effort. In 2026, an AI agent can write the code for that same story in 4 seconds. So, did your team’s velocity just jump from 50 to 5,000? No.

If you are still measuring "Velocity" or "Story Points," you are tracking a vanity metric that has been broken by automation. When AI generates code instantly but introduces subtle logic bugs that take humans days to debug, "speed of generation" becomes irrelevant.

The future of delivery measurement isn't about how fast you start; it's about the friction between your silicon workforce (Agents) and your carbon workforce (Humans). We are introducing the two critical KPIs for the Agentic Agile Office: Agent Efficiency Score (AES) and Human-Agent Handoff Time.

Metric 1: Agent Efficiency Score (AES)

Definition: AES measures the autonomy and reliability of a specific AI Agent within a workflow. It answers the question: "Is this agent actually saving us work, or is it just creating noise?"

In the early days of AI (2023-2024), we celebrated "Lines of Code Generated." That was a mistake. Today, we measure successful outcomes.

The Formula

AES = (Tasks Completed Successfully ÷ (Total Tasks Assigned + (Human Interventions × Complexity Penalty))) × 100

How to Read the Score

- High AES (80-100): The agent works like a "Senior Developer." It takes a ticket, executes the task, passes unit tests, and requires zero human edits.

- Low AES (<50): The agent is a "Junior Intern." It generates output quickly, but a human has to rewrite 60% of it. The agent is costing you more in review time than it saves in drafting time.
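A minimal sketch of the calculation in Python. It assumes the numerator is the number of tasks completed successfully and that the result is scaled to 0-100 to match the score bands. The `AgentStats` fields and the example penalty value are illustrative, not part of any tool's API.

```python
from dataclasses import dataclass


@dataclass
class AgentStats:
    """Hypothetical per-agent counters for one reporting period."""
    tasks_assigned: int
    tasks_succeeded: int       # assumed numerator: tasks with a successful outcome
    human_interventions: int
    complexity_penalty: float  # assumed weight applied to each intervention


def agent_efficiency_score(stats: AgentStats) -> float:
    """Return AES on a 0-100 scale under the assumptions above."""
    denominator = (
        stats.tasks_assigned
        + stats.human_interventions * stats.complexity_penalty
    )
    if denominator <= 0:
        return 0.0  # nothing assigned yet, so there is nothing to score
    return 100.0 * stats.tasks_succeeded / denominator


def describe(score: float) -> str:
    """Map a score onto the bands named in the article."""
    if score >= 80:
        return "High AES: works like a senior developer"
    if score < 50:
        return "Low AES: works like a junior intern"
    return "Between the two bands described in the article"


# 100 tasks, 90 successes, 5 interventions weighted at 2.0 each
score = agent_efficiency_score(AgentStats(100, 90, 5, 2.0))
print(round(score, 1), describe(score))
```

Because interventions sit in the denominator, every human rescue lowers the score even when the task eventually succeeds.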

Metric 2: Human-Agent Handoff Time (The "Context Loading" Tax)

Definition: The time elapsed between an AI Agent signaling "I am stuck" (or "Please Review") and a human successfully resuming the work. This is the silent killer of productivity in 2026.

The Scenario

- 09:00 AM: An AI Agent starts refactoring a legacy API.

- 09:05 AM: The Agent hits an ambiguity in the documentation and pauses, tagging a Senior Engineer.

- 02:00 PM: The Senior Engineer sees the notification.

- 02:30 PM: Having spent 30 minutes reading the logs to understand what the Agent was trying to do, the Engineer resumes the work.

The Handoff Time here is 5 hours 25 minutes (09:05 AM to 02:30 PM), or roughly 5.5 hours.
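The scenario's arithmetic can be checked with a short Python sketch. It assumes all timestamps fall on the same day and that work resumes at 02:30 PM. The function name is illustrative.

```python
from datetime import datetime

TIME_FORMAT = "%I:%M %p"  # 12-hour clock, e.g. "09:05 AM"


def minutes_between(start: str, end: str) -> int:
    """Whole minutes from start to end, assuming both are on the same day."""
    started = datetime.strptime(start, TIME_FORMAT)
    ended = datetime.strptime(end, TIME_FORMAT)
    return int((ended - started).total_seconds() // 60)


# Agent pauses at 09:05 AM, human notices at 02:00 PM, resumes at 02:30 PM
waiting = minutes_between("09:05 AM", "02:00 PM")          # notification unseen
context_loading = minutes_between("02:00 PM", "02:30 PM")  # reading the logs
handoff = minutes_between("09:05 AM", "02:30 PM")

print(waiting, context_loading, handoff)
```

Splitting the total this way shows where the delay sits: 295 of the 325 minutes are spent waiting for the notification to be seen, and the remaining 30 are the context-loading tax.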

Why It Matters

In traditional Agile, we measured "Cycle Time." In Agentic Agile, "Machine Time" is near-zero. The entirety of your delivery delay now lives in the Handoff. High handoff times indicate that your AI Orchestrator has failed to design a good notification system, or that the AI is not summarizing its state effectively for the human.

The Goal: "Warm Handoffs"

A "Warm Handoff" occurs when the AI generates a Context Summary before pausing.

- Bad Handoff: "Error 404 on line 32. Human help needed."

- Warm Handoff: "I successfully migrated the database schema, but the User Auth table has a conflict. I have paused the rollback. Here is a 3-bullet summary of the conflict for your decision."
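One way to make warm handoffs the default is to require the agent to fill in a structured summary before it pauses. The sketch below is a hypothetical Python data structure, not the schema of any particular orchestration tool. The field names and the placeholder decision points are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class HandoffSummary:
    """Hypothetical context summary an agent fills in before pausing."""
    completed: str       # what finished successfully
    blocker: str         # what stopped the agent
    current_state: str   # what state the system was left in
    decision_points: list[str] = field(default_factory=list)

    def is_warm(self) -> bool:
        """A handoff is 'warm' only if every part of the context is present."""
        texts = (self.completed, self.blocker, self.current_state)
        return all(t.strip() for t in texts) and bool(self.decision_points)

    def render(self) -> str:
        """Format the summary as the message shown to the human."""
        lines = [
            f"Done: {self.completed}",
            f"Blocked on: {self.blocker}",
            f"State: {self.current_state}",
            "Needs your decision:",
        ]
        lines += [f"- {point}" for point in self.decision_points]
        return "\n".join(lines)


summary = HandoffSummary(
    completed="Migrated the database schema",
    blocker="The User Auth table has a conflict",
    current_state="Rollback is paused",
    decision_points=["<conflict detail 1>", "<conflict detail 2>", "<conflict detail 3>"],
)
print(summary.render())
```

An orchestrator could refuse to send the notification until `is_warm()` returns `True`, which rules out messages like the bad handoff above.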

The New 2026 Dashboard

Forget the Burndown Chart. Your Project Office dashboard should now look like this:

| Old Metric (Deprecate) | New Metric (Adopt) | Why? |
|---|---|---|
| Velocity | Throughput per Dollar | Speed is infinite with AI; cost (API tokens) is the new constraint. |
| Cycle Time | Handoff Friction | Code generation is instant; human review is the bottleneck. |
| Defect Density | Reversion Rate | How often do humans reject the AI's Pull Request? |
| Story Points | AES (Agent Efficiency) | Measures the quality of the automation, not the effort of the human. |
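As a rough illustration of Throughput per Dollar, the Python sketch below divides completed tasks by token spend. The article does not define the formula, so this is one plausible reading. The function name, the flat per-million-token price, and the example figures are assumptions.

```python
def throughput_per_dollar(
    tasks_completed: int,
    tokens_used: int,
    usd_per_million_tokens: float,
) -> float:
    """Completed tasks per dollar of API spend, assuming one flat token price."""
    cost_usd = tokens_used / 1_000_000 * usd_per_million_tokens
    if cost_usd <= 0:
        raise ValueError("token spend must be positive")
    return tasks_completed / cost_usd


# Hypothetical sprint: 120 tasks, 40M tokens at $5 per million tokens ($200)
print(throughput_per_dollar(120, 40_000_000, 5.0))
```

A real dashboard would likely price input and output tokens separately and include the cost of human review time. This version keeps only the token term named in the table.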

FAQ: Transitioning Your Metrics

Q: Should we stop using Story Points?

A: If your team uses Copilot or Cursor for >50% of their code, yes. Story points estimate human effort. If the human isn't writing the code, the estimate is a lie. Switch to Task Count or T-shirt Sizing for the human-review portion only.

Q: How do we collect the data for these metrics?

A: Modern orchestration tools (like Jira Intelligence or Linear) now have API hooks that track "Agent Rejection Rates." You can script a simple dashboard that counts how many AI-generated PRs were merged without changes vs. those that required edits.

Q: Will AES be used to judge individual developers?

A: No, AES judges the Agent, not the Human. A low AES means the Human is doing too much work to babysit the tool. It is a signal to upgrade the tool, not blame the developer.
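The simple dashboard script mentioned in the FAQ could start as something like the Python sketch below. It works on a plain list of records rather than calling any real tool's API. The record shape and the three outcome labels are assumptions for illustration.

```python
from collections import Counter

# Hypothetical outcome labels for AI-generated pull requests
OUTCOMES = ("merged_unchanged", "merged_with_edits", "rejected")


def pr_outcome_rates(pull_requests: list[dict]) -> dict[str, float]:
    """Share of AI-generated PRs in each outcome bucket (0.0 to 1.0)."""
    counts = Counter(pr["outcome"] for pr in pull_requests)
    total = sum(counts[outcome] for outcome in OUTCOMES)
    if total == 0:
        return {outcome: 0.0 for outcome in OUTCOMES}
    return {outcome: counts[outcome] / total for outcome in OUTCOMES}


# Sample data that a real script would export from its tracker instead
sample = [
    {"id": 1, "outcome": "merged_unchanged"},
    {"id": 2, "outcome": "merged_unchanged"},
    {"id": 3, "outcome": "merged_with_edits"},
    {"id": 4, "outcome": "rejected"},
]
rates = pr_outcome_rates(sample)
print(rates)
```

Under this reading, the `rejected` share corresponds to the Reversion Rate row in the dashboard table, and the `merged_unchanged` share shows how often the agent needed no human edits.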
