The Agile Team’s AI Usage Policy: A Template for Leaders

Shadow AI is one of the biggest risks to engineering integrity today. Your developers are likely pasting error logs, database schemas, and even proprietary algorithms into ChatGPT to debug faster.

If you ban these tools, you slow down your team. If you ignore them, you risk leaking IP. The solution is Governance, not Prohibition.

Below is a production-ready "Acceptable Use Policy" (AUP) designed for Agile teams. It uses a "Traffic Light Protocol" to make it easy for developers to know what they can and cannot do.

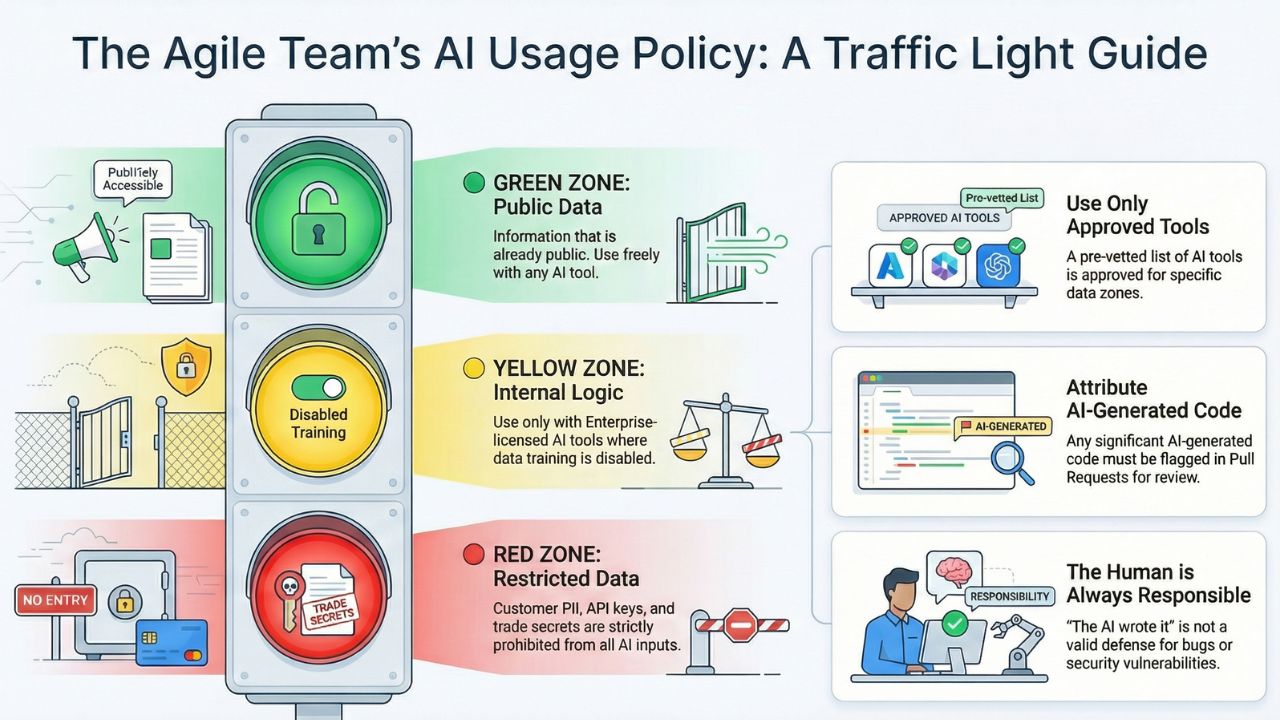

1. The "Traffic Light" Data Protocol

Before you copy the template, understand the logic. We classify data into three zones:

🟢 GREEN ZONE (Public Data)

Definition: Information already available on our public marketing website or open-source repositories.

Action: Can be used with any AI tool (ChatGPT Free, Claude, Gemini).

🟡 YELLOW ZONE (Internal Logic)

Definition: Non-sensitive business logic, generic utility functions, and internal memos.

Action: Can be used only with Enterprise-licensed tools (e.g., GitHub Copilot Enterprise) whose terms exclude your data from model training.

🔴 RED ZONE (Restricted Data)

Definition: PII (Customer Names, Emails), Secrets (API Keys, Passwords), and Core IP (Trade Secret Algorithms).

Action: STRICTLY PROHIBITED as input to any AI tool, including Enterprise-licensed ones.
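The zones can also be encoded as a pre-flight check. A developer, or an IDE or browser plugin, would run it before any text goes to an AI tool. The Python sketch below is a hypothetical illustration. `Zone`, `classify`, and the regex patterns are assumptions, not part of any product. The patterns catch only obvious Red Zone data: email addresses, AWS-style access key IDs, PEM private key headers, and password assignments. No pattern can recognize a trade-secret algorithm, so the developer still chooses between Green and Yellow through the `is_public` flag. A dedicated secret and PII scanner should back this check up.

```python
import re
from enum import IntEnum


class Zone(IntEnum):
    """Traffic Light zones, ordered from least to most sensitive."""
    GREEN = 1   # public data
    YELLOW = 2  # internal, non-sensitive logic
    RED = 3     # PII, secrets, core IP


# Illustrative, deliberately incomplete Red Zone patterns (assumptions).
RED_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "password_assignment": re.compile(
        r"(?i)(password|passwd|secret|api_key)\s*[:=]\s*\S+"
    ),
}


def classify(text: str, is_public: bool) -> tuple[Zone, list[str]]:
    """Return the zone for a snippet and the names of any Red Zone patterns hit.

    `is_public` is supplied by the developer: True only if the snippet already
    appears on the public website or in an open-source repository.
    """
    hits = [name for name, pattern in RED_PATTERNS.items() if pattern.search(text)]
    if hits:
        return Zone.RED, hits
    return (Zone.GREEN if is_public else Zone.YELLOW), []


zone, hits = classify("db_password = hunter2", is_public=False)
print(zone.name, hits)  # RED ['password_assignment']
```

Any Red hit should block the paste outright. A Yellow result means the snippet may go only to an Enterprise-licensed tool.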

2. The Policy Template

Copy the text below into your internal Confluence or Notion page.

Policy: Generative AI Acceptable Use

Effective Date: [Today's Date]

Applies to: All Engineering & Product Staff

1. Purpose

To enable the use of AI tools for productivity while protecting [Company Name]'s Intellectual Property and Customer Data.

2. Approved Tool List

- GitHub Copilot (Enterprise): APPROVED for Green & Yellow data.

- ChatGPT (Free/Plus Personal): APPROVED for Green data ONLY.

- Unknown/Beta Tools: PROHIBITED until security review.

3. Code Attribution Rules

Any AI-generated code that exceeds 10 lines or contains complex logic must be tagged in a Pull Request comment:

"Generated by [Tool Name]. Verified by [Human Name]."

4. The "Human in the Loop" Mandate

You remain fully responsible for the code you commit. "The AI wrote it" is not a valid defense for bugs or security vulnerabilities. You must review every line of generated code.
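Two rules in the template are mechanical enough to enforce in tooling such as a proxy or a CI job: the Approved Tool List and the attribution tag. The Python sketch below shows one hypothetical way to do it. The tool identifiers, function names, and regex are assumptions, not features of GitHub, ChatGPT, or any CI product. `is_allowed` denies by default, so any tool missing from the list is rejected, including unknown or beta tools awaiting review. `check_pr` looks for the tag written on its own line without the surrounding quotes. Neither the line count nor "complex logic" can be measured reliably from a diff, so both are passed in as values the author self-reports, for example through a PR template checkbox.

```python
import re
from enum import IntEnum


class Zone(IntEnum):
    """Traffic Light zones, ordered from least to most sensitive."""
    GREEN = 1
    YELLOW = 2
    RED = 3


# Hypothetical machine-readable Approved Tool List (template section 2):
# each tool maps to the most sensitive zone it may receive.
APPROVED_TOOLS: dict[str, Zone] = {
    "github-copilot-enterprise": Zone.YELLOW,
    "chatgpt-personal": Zone.GREEN,  # Free/Plus personal accounts
}


def is_allowed(tool: str, zone: Zone) -> bool:
    """Default-deny check for sending data of `zone` to `tool`."""
    if zone >= Zone.RED:
        return False  # Red Zone data is prohibited from every AI tool
    max_zone = APPROVED_TOOLS.get(tool)
    if max_zone is None:
        return False  # unknown/beta tools are blocked until security review
    return zone <= max_zone


# Attribution tag (template section 3), one per line, without quotes:
#   Generated by <Tool Name>. Verified by <Human Name>.
ATTRIBUTION_RE = re.compile(
    r"Generated by (?P<tool>.+?)\.\s+Verified by (?P<reviewer>.+?)\.[ \t]*$",
    re.MULTILINE,
)
LINE_THRESHOLD = 10  # tag required when AI-generated code exceeds 10 lines


def find_attributions(pr_text: str) -> list[tuple[str, str]]:
    """Return (tool, reviewer) pairs for every attribution tag in the PR text."""
    return [
        (m.group("tool").strip(), m.group("reviewer").strip())
        for m in ATTRIBUTION_RE.finditer(pr_text)
    ]


def check_pr(pr_text: str, ai_generated_lines: int, is_complex: bool = False) -> bool:
    """Return True if the PR satisfies the attribution rule.

    `ai_generated_lines` and `is_complex` are self-reported by the author.
    """
    needs_tag = ai_generated_lines > LINE_THRESHOLD or is_complex
    return not needs_tag or bool(find_attributions(pr_text))


print(is_allowed("chatgpt-personal", Zone.YELLOW))  # False
print(find_attributions("Generated by GitHub Copilot. Verified by Jane Doe."))
# [('GitHub Copilot', 'Jane Doe')]
```

Default-deny is the key design choice. Approving a new tool means adding one entry after security review, instead of remembering to block every new tool that appears.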

3. Frequently Asked Questions (FAQ)

Q: What is Shadow AI?

A: Shadow AI refers to the unsanctioned use of artificial intelligence tools by employees. For example, a developer pasting proprietary code into a personal ChatGPT account to fix a bug creates a data leak risk.

Q: Why not simply ban AI tools?

A: Banning AI entirely stifles innovation. A Traffic Light system (Green/Yellow/Red) allows safe experimentation with public data and restricts internal logic to Enterprise tools, while strictly prohibiting PII and Trade Secrets from any AI input.

Q: Does the policy address copyright risk in AI-generated code?

A: Yes. The policy requires AI-generated code to be treated like "Open Source" code in terms of risk. A human must review it to ensure the model has not inadvertently reproduced copyrighted material.